The state of the art in ‘Monitoring and Evaluation’ and ‘Impact Evaluation’ practices

Reflections on the UK Evaluation Society Annual Conference (2013)

The UK Evaluation Society (UKES) Annual Conference is a unique opportunity to share knowledge and experience with other Monitoring and Evaluation (M&E) and Impact Evaluation (IE) professionals. The two days of presentations and debates at this year’s edition inspired three main points for reflection that I would like to highlight here:

- The definition of Value for Money (VFM), which a session facilitated by Save the Children broke down into economic and operational VFM. The former is the economic benefit arising from the implementation of the programme; the latter is the intrinsic value of the service (often public in nature) provided by the activity (better-quality education is good per se, regardless of the cost of provision);

- The IE community is now ready to leave behind the (sterile?) debate on qualitative vs. quantitative methodologies for IE: a theory-based, mixed-methods approach is considered better able to deal with the complexity of society. Moreover, different methodologies serve different purposes, and it is up to the evaluator to select the ‘right one’ each time;

- The challenge M&E and IE specialists face in being seen by the organisations they work in as a resource rather than a threat. This is a reciprocal learning process, in which the M&E specialist gains a deep understanding of the rationale, complexities, assumptions and risks of the activities, and programme managers use rigorously tested practices to improve delivery and both operational and economic VFM.

The Conference was also an opportunity to present INASP’s approach to ensuring both that IEs are carried out rigorously and that the learning is shared with, and informs the activity of, relevant stakeholders.

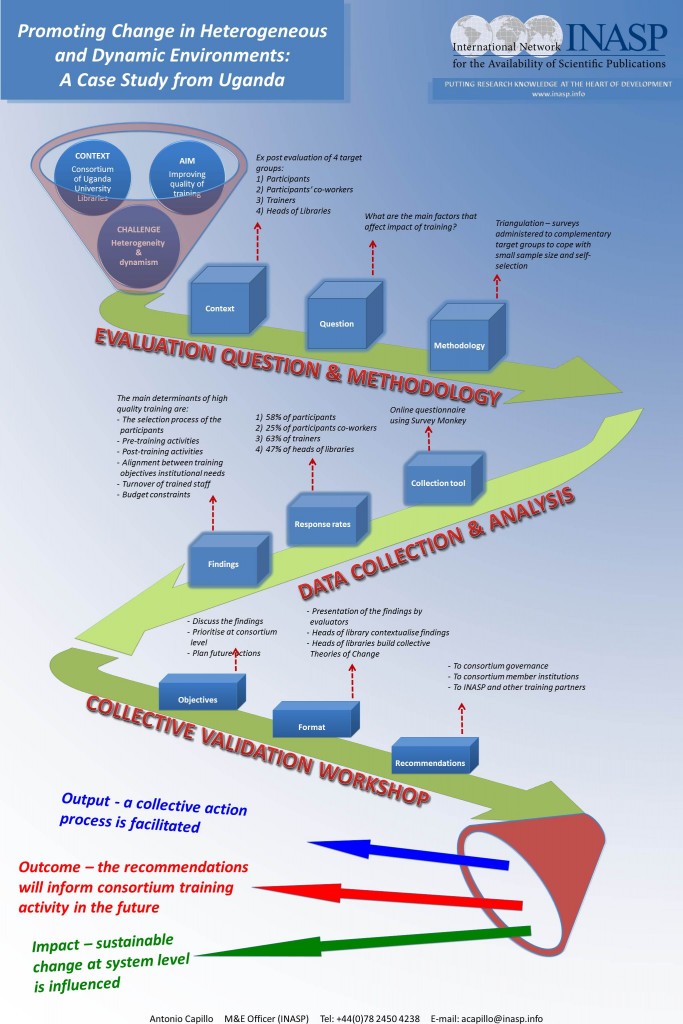

As INASP’s M&E Officer, I presented the poster ‘Promoting Change in Heterogeneous and Dynamic Environments: A Case-Study From Uganda’ (awarded best Conference Poster), which illustrated INASP’s approach to carrying out an ex-post, small-N IE of the effectiveness of training delivered by INASP and the Consortium of Uganda University Libraries (CUUL) in the period 2010–2012.

The evaluation followed three steps. First, the evaluation questions were formulated and the methodology was defined: a triangulation approach to deal with the issues of small population size (small N) and self-selection in the treatment group. Second, data were collected and analysed using ‘cross-fire’ online questionnaires administered to four different target groups, identifying both common trends and contradictions in the responses. Third, the findings were discussed and a set of actions (policy recommendations) was planned during a validation workshop, facilitated by INASP, with the heads of the libraries of CUUL member institutions.

If you want to know more about the issues discussed, you may find the following resources useful:

On VFM: Emmi et al. 2011, ITAD 2010; on theory-based approaches: 3ie 2009 and LSE 2012; on methodologies: DFID 2013, DFID 2012, Better Evaluation and Newman 2012.

I would appreciate any other perspectives.

(This post was originally published on Development in Progress.)

Thanks, Antonio, for sharing your experience at the conference, and congratulations on winning an award for your poster!

Thank you, Ravi.