Helping researchers distinguish credible journals

I recently spoke as part of a webinar about the challenge of journals with dubious publishing practices. This is an issue that INASP keeps a close eye on. Through AuthorAID, we work with researchers who are looking to ensure they publish their research in a reputable journal. In addition, we work with genuine journals in the Global South that can be unfairly mistrusted because of their geography and small size. This post is based on the webinar.

I started my webinar talk by sharing an example of a conversation with a researcher who was looking for advice about paying an article processing charge (APC) for an article that had just been accepted by a journal. The journal had an impressive, professional-looking website. However, I noticed a few odd things: not all of the editors listed were at the institutions attributed to them, and they didn’t mention this journal in their biographies. Perhaps oddest of all, putting the postcode of the publisher’s UK office address into Google brought up a photo of somebody’s house. I recommended that the researcher seek advice on the AuthorAID network about alternative journals.

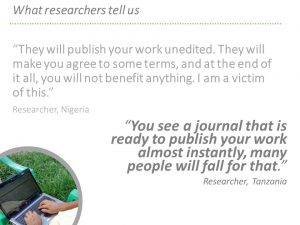

This could be a one-off, but we know from the many experiences shared with us via AuthorAID that this scenario is sadly all too common. (I recommend this great blog post from my colleague Andy Nobes from the AuthorAID project, in which he goes through a list of journals and flags up things that look suspicious.)

In some recent AuthorAID research about experiences of and attitudes to open access in the Global South, which we hope to publish soon, we found that 6% of the researchers we surveyed reported having published in journals with deceitful publishing practices. We suspect that this 6% is a significant underestimate, as it only covers the people who have found out that they have done so; anecdotes suggest that many don’t discover the problems with the journals they have published in until much later.

And in some cases the downsides are not immediately obvious. For example, many institutions are not aware of which journals should be avoided, so publication in these journals can still count towards promotion – until the researcher wants to move to another institution that is aware of the poor quality of these publications.

This is not just a problem for researchers in the Global South, of course, but for researchers all over the world. Nor, we find, is it just a problem for authors. There are many good, reputable journals published in the Global South that are a potential source of research for scholars and also a place where they may choose to publish. For example, we know of two professors in the UK who regularly publish in medical journals on the Nepal Journals Online platform because their research relates to Nepal and they see these journals as the best avenue for reaching the readers they want to connect with.

These journals are often small, scholar-led initiatives, a far cry from the multinational publishing giants that dominate the Journal Impact Factor lists, and this can be a challenge for them. We see distrust of legitimate journals based on crude assumptions about geography and other superficial features. An impressive-looking website is not a guarantee of journal quality – nor is an amateur-looking site necessarily an indication of poor-quality processes. Likewise, the size of a journal’s APC does not in itself say anything about whether the journal has, for example, a robust peer review process or a clear ethics policy.

Through our Journals Online project, we kept hearing that genuine journals in the Global South want support with, and recognition of, the quality of their publishing processes. This is why African Journals Online and INASP launched Journal Publishing Practices and Standards – or JPPS – last year.

The JPPS Framework provides:

- An educational tool

- A formal process for assessing new journals

- A process for improving publishing quality

- Public acknowledgement of high standards

- Guidance to researchers on the standards of journals

- Assessment

The 108 detailed JPPS criteria were developed after extensive research into international indexes. Journals are awarded one of six badges depending on which criteria they meet. We recently blogged about the tremendous support that this initiative has received from the editors we work with.

Thinking and checking before submitting

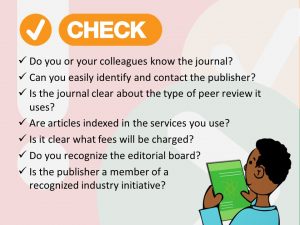

Because they are displayed on the Journals Online platforms, the new JPPS badges are now also part of the Think. Check. Submit. (TCS) checklist. TCS is a cross-industry initiative, led by representatives from ALPSP, DOAJ, INASP, ISSN, LIBER, OASPA, STM, UKSG, and individual publishers, to help people recognize and avoid dubious journals.

The idea of TCS is simple: it recognizes the complex landscape for authors and readers and, as the name suggests, reminds people to think and check before submitting – or reading.

Its checklist is neither a blacklist nor a whitelist; it is a campaign to help people think through good journal selection processes. And, as someone involved in challenging the North America/western Europe biases in scholarly communication, one of my favourite aspects of it is that it is available in multiple languages – more than 30 at the last count.

Of course, avoiding dubious publishing practices goes beyond following a checklist. Like all scams, journals that set out to deceive people and separate them from their money can be amateurish, but they can also be extremely clever. Some unscrupulous journals may be adept at mimicking the look and feel of high-quality journals, or even at stealing their identities, so it is easy to be fooled.

And the issue we hear about time and again through AuthorAID is that people may not know how to check journals or what publishing terms mean. One thing we are now doing at INASP is not just encouraging people to use TCS but also asking them to tell us where things are unclear or need more detail, so that we can pass this on to the TCS committee and build on the checklist going forward. It would be great to hear from anyone reading this post about anything that would make the TCS checklist more useful.

Researchers need to be absolutely sure they can trust a journal before publishing their hard work there – and budget holders need to be assured that the journals are trustworthy before releasing money for APCs.
